Much of the pain I’ve seen and experienced with agentic coding has come from “obviously-LLM’d” code. Some functions suddenly ignore the codebase’s conventions entirely, unused stub code or tests get left behind, or code is suddenly littered with out-of-place comments that clash with the existing style. This is especially a problem with agentic coding, since it goes beyond autocompleting with an LLM or refactoring smaller pieces of code.

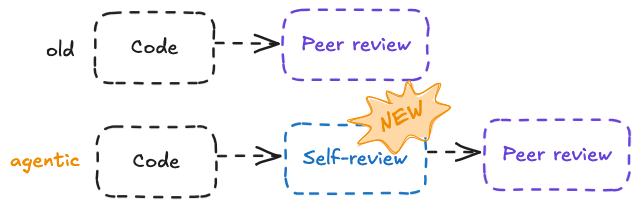

My hunch is that making agentic coding such a fundamental part of producing code warrants a new self-review step in the code review process, one that none of our established working agreements (implicit or explicit) know how to handle yet. It’s deeply ingrained that whoever wrote the code knows all of its context and every line, so it can be pushed straight to peers for review. Code written by an AI, however, needs an equivalent review from the person submitting it before it reaches anyone else.

Peer reviewing kinda sucks

I’m personally not a big fan of reviewing other people’s code. It requires a huge amount of context switching and understanding how each new part of the code fits together with the rest, and it usually doesn’t feel like it directly benefits my own ongoing tasks.

Still, it is a core part of the job and essential for both knowledge sharing and code/product quality reasons.

With LLM agents, it’s easy to think that they have replaced the coding part of development while everything else stays the same. But increasingly I don’t think this is true. Rather, the review process needs to be adapted to formally include reading the LLM-produced code yourself.

Self-review

TBD

How can self-review be done as thoroughly as peer review is done today? What tooling would help?